Applies To:

BIG-IP AAM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP APM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP GTM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP Link Controller

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP Analytics

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP LTM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP AFM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP PEM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

BIG-IP ASM

- 11.5.10, 11.5.9, 11.5.8, 11.5.7, 11.5.6, 11.5.5, 11.5.4, 11.5.3, 11.5.2, 11.5.1

Configuring Layer 3 nPath Routing

Overview: Layer 3 nPath routing

With Layer 3 nPath routing, you can load balance traffic over a routed topology in your data center. In this deployment, each server sends its responses directly back to the client, even when the servers and any intermediate routers are on different networks. This routing method uses IP encapsulation to create a unidirectional outbound tunnel from the server pool to the server.

You can also override the encapsulation for a specific pool member, either exempting that pool member from encapsulation or specifying a different encapsulation protocol. The available encapsulation protocols are IPIP and GRE.

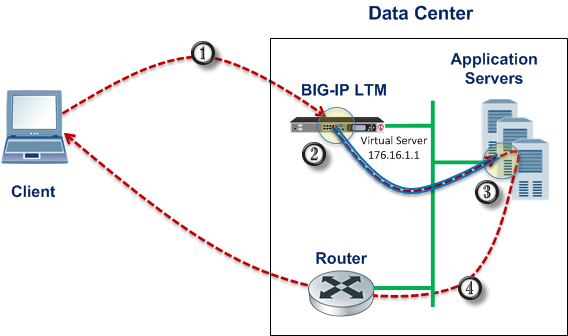

Example of a Layer 3 routing configuration

This illustration shows the path of a packet in a deployment that uses Layer 3 nPath routing through a tunnel.

- The client sends traffic to a Fast L4 virtual server.

- The pool encapsulates the packet and sends it through a tunnel to the server.

- The server removes the encapsulation header and returns the packet to the network.

- The target application receives the original packet, processes it, and responds directly to the client.

Configuring Layer 3 nPath routing using tmsh

Configuring a Layer 3 nPath monitor using tmsh

Layer 3 nPath routing example

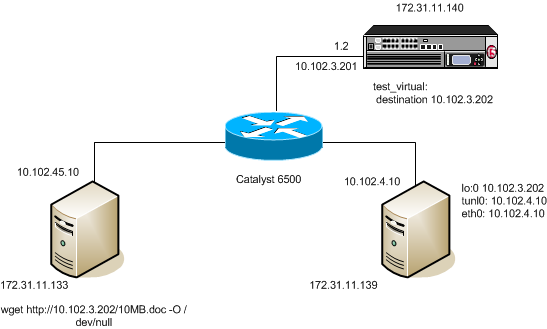

The following illustration shows one example of an L3 nPath routing configuration in a network.

Example of a Layer 3 routing configuration

The following examples show the configuration code that supports the illustration.

    # ifconfig eth0 inet 10.102.45.10 netmask 255.255.255.0 up
    # route add -net 10.0.0.0 netmask 255.0.0.0 gw 10.102.45.1

    # - create node pointing to server's ethernet address
    # ltm node 10.102.4.10 {
    #     address 10.102.4.10
    # }
    # - create transparent monitor
    # ltm monitor tcp t.ipip {
    #     defaults-from tcp
    #     destination 10.102.3.202:http
    #     interval 5
    #     time-until-up 0
    #     timeout 16
    #     transparent enabled
    # }
    # - create pool with ipip profile
    # ltm pool ipip.pool {
    #     members {
    #         10.102.4.10:any { - real server's ip address
    #             address 10.102.4.10
    #         }
    #     }
    #     monitor t.ipip - transparent monitor
    #     profiles {
    #         ipip
    #     }
    # }
    # - create FastL4 profile with PVA disabled
    # ltm profile fastl4 fastL4.ipip {
    #     app-service none
    #     pva-acceleration none
    # }
    # - create FastL4 virtual with custom FastL4 profile from previous step
    # ltm virtual test_virtual {
    #     destination 10.102.3.202:any - server's loopback address
    #     ip-protocol tcp
    #     mask 255.255.255.255
    #     pool ipip.pool - pool with ipip profile
    #     profiles {
    #         fastL4.ipip { } - custom fastL4 profile
    #     }
    #     translate-address disabled - translate address disabled
    #     translate-port disabled
    #     vlans-disabled
    # }
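
The listing above is in stored-configuration form. As a rough sketch, the same objects might be created with one-line tmsh commands like the ones below. The option spelling is an assumption derived from the property names in the listing, so check it against the tmsh reference for your version before use. The `TMSH` variable and the `create_npath_objects` function are illustrative names only. By default the script just prints the commands for review.

```shell
#!/bin/sh
# Sketch: tmsh one-liners mirroring the stored configuration listed above.
# TMSH is a hypothetical switch for this example: by default each command is
# only printed; set TMSH=tmsh on a BIG-IP system to run the commands instead.
TMSH="${TMSH:-echo tmsh}"

create_npath_objects() {
    # Node pointing to the server's Ethernet address
    $TMSH create ltm node 10.102.4.10 address 10.102.4.10

    # Transparent monitor aimed at the address the virtual server listens on
    $TMSH create ltm monitor tcp t.ipip defaults-from tcp \
        destination 10.102.3.202:http interval 5 time-until-up 0 \
        timeout 16 transparent enabled

    # Pool with the ipip encapsulation profile and the transparent monitor
    $TMSH create ltm pool ipip.pool members add { 10.102.4.10:any } \
        monitor t.ipip profiles add { ipip }

    # FastL4 profile with PVA (hardware) acceleration disabled
    $TMSH create ltm profile fastl4 fastL4.ipip pva-acceleration none

    # FastL4 virtual server with address and port translation disabled
    $TMSH create ltm virtual test_virtual destination 10.102.3.202:any \
        ip-protocol tcp mask 255.255.255.255 pool ipip.pool \
        profiles add { fastL4.ipip } \
        translate-address disabled translate-port disabled
}

create_npath_objects
```

Printing first makes it easy to compare each command against the listing before anything is changed on the system.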

Linux DSR server configuration:

    # modprobe ipip
    # ifconfig tunl0 10.102.4.10 netmask 255.255.255.0 up
    # ifconfig lo:0 10.102.3.202 netmask 255.255.255.255 -arp up
    # echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    # echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    # echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter
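
On newer Linux distributions, `ifconfig` is often not installed by default, and the `ip` and `sysctl` utilities are used instead. The following is a minimal sketch of the same server-side setup with those tools, assuming the same interface names and addresses as the commands above. The `run` helper, the `DRY_RUN` variable, and the `configure_dsr_server` function are illustrative names, not part of any F5 or Linux tooling. With `DRY_RUN=1` (the default here), the script only prints the commands.

```shell
#!/bin/sh
# Sketch: iproute2/sysctl equivalent of the Linux DSR server setup above.
# DRY_RUN and run() are hypothetical helpers for this example only.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"      # print the command instead of executing it
    else
        "$@"           # execute the command (requires root)
    fi
}

configure_dsr_server() {
    # Load the IPIP tunnel module, which provides the tunl0 interface
    run modprobe ipip
    # Address the tunnel interface with the pool member's address
    run ip addr add 10.102.4.10/24 dev tunl0
    run ip link set tunl0 up
    # Put the virtual server address on a loopback alias.
    # ifconfig's -arp flag is not reproduced here (assumption: the
    # arp_ignore/arp_announce settings below are what keep the server
    # from answering ARP for this address).
    run ip addr add 10.102.3.202/32 dev lo label lo:0
    run sysctl -w net.ipv4.conf.all.arp_ignore=1
    run sysctl -w net.ipv4.conf.all.arp_announce=2
    # Disable reverse-path filtering on the tunnel interface
    run sysctl -w net.ipv4.conf.tunl0.rp_filter=0
}

configure_dsr_server
```

To apply the settings rather than print them, run the script as root with `DRY_RUN=0`.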