Applies To:

BIG-IP AAM

- 11.6.5, 11.6.4, 11.6.3, 11.6.2, 11.6.1

About HTTP compression profiles

HTTP compression reduces the amount of data to be transmitted, thereby significantly reducing bandwidth usage. All of the tasks needed to configure HTTP compression on the BIG-IP system, as well as the compression software itself, are centralized on the BIG-IP system. The tasks needed to configure HTTP compression for objects in an Application Acceleration Manager module policy node are available in the Application Acceleration Manager, but an HTTP compression profile must be enabled for them to function.

When configuring the BIG-IP system to compress data, you can:

- Configure the system to include or exclude certain types of data.

- Specify the levels of compression quality and speed that you want.

You can enable the HTTP compression option by setting the URI Compression or the Content Compression setting of the HTTP Compression profile to URI List or Content List, respectively. This causes the BIG-IP system to compress HTTP content for any response in which the Request-URI of the request, or the Content-Type header of the response, matches a value that you specify in the URI List or Content List setting.

Exclusion is useful because some URI or file types might already be compressed. Using CPU resources to compress already-compressed data is not recommended because the cost of compressing the data usually outweighs the benefits. Examples of regular expressions that you might want to specify for exclusion are .*\.pdf, .*\.gif, or .*\.html.
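The exclusion check described above can be sketched as follows. This is a minimal illustration, not the BIG-IP implementation: the function name `is_excluded` and the sample URIs are hypothetical, and Python's `re` module stands in for the BIG-IP pattern matcher, with `re.fullmatch` used on the assumption that a pattern must match the whole URI.

```python
import re

# Example exclusion patterns taken from the text above.
EXCLUDE_URI_PATTERNS = [r".*\.pdf", r".*\.gif", r".*\.html"]


def is_excluded(request_uri, patterns=EXCLUDE_URI_PATTERNS):
    """Return True if the URI matches any exclusion pattern in full.

    Assumption: the whole URI must match, so re.fullmatch is used.
    """
    return any(re.fullmatch(p, request_uri) for p in patterns)


# Hypothetical request URIs, for illustration only.
for uri in ["/docs/manual.pdf", "/img/logo.gif", "/api/data.json"]:
    state = "excluded" if is_excluded(uri) else "eligible for compression"
    print(uri, "->", state)
```

Keeping the patterns in one list mirrors the way a single URI List setting holds every pattern that the profile evaluates.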

HTTP Compression profile options

You can use an HTTP Compression profile alone, or with the BIG-IP Application Acceleration Manager, to reduce the amount of data to be transmitted, thereby significantly reducing bandwidth usage. The tasks needed to configure HTTP compression for objects in an Application Acceleration Manager policy node are available in the Application Acceleration Manager, but an HTTP Compression profile must be enabled for them to function.

About Web Acceleration profiles

When used by the BIG-IP system without an associated Application Acceleration Manager application, the Web Acceleration profile uses basic default acceleration.

When used with the Application Acceleration Manager, the Web Acceleration profile includes an ordered list of associated Application Acceleration Manager applications, each of which defines the host names, IP addresses, and policy that is applied to a request that matches the specified host name or IP address. You can enable one or more Application Acceleration Manager applications in a Web Acceleration profile.

A Web Acceleration profile with multiple Application Acceleration Manager applications that target different host names can be handled by the same virtual server, or by multiple virtual servers, while simultaneously allowing each application to apply a different policy to matching traffic.

The Application Acceleration Manager is enabled by configuring an Application Acceleration Manager application and enabling it in the Web Acceleration profile.

Web Acceleration profile settings

This table describes the Web Acceleration profile configuration settings and default values.

| Setting | Value | Description |

|---|---|---|

| Name | No default | Specifies the name of the profile. |

| Parent Profile | Selected predefined or user-defined profile | Specifies the selected predefined or user-defined profile. |

| Partition / Path | Common | Specifies the partition and path to the folder for the profile objects. |

| Cache Size | 100 | Without a provisioned BIG-IP Application Acceleration Manager, this setting specifies the maximum size in megabytes (MB) reserved for the cache. When the cache reaches the maximum size, the system starts removing the oldest entries. With a provisioned Application Acceleration Manager, this setting defines the minimum reserved cache size. The maximum size of the minimum reserved cache is 64 GB (with provisioned cache availability). An allocation of 15 GB is practical for most implementations. The total available cache includes the minimum reserved cache and a dynamic cache, used as necessary when the minimum reserved cache is exceeded, for a total cache availability of 256 GB. |

| Maximum Entries | 10000 | Specifies the maximum number of entries that can be in the cache. |

| Maximum Age | 3600 | Specifies how long in seconds that the system considers the cached content to be valid. |

| Minimum Object Size | 500 | Specifies the smallest object in bytes that the system considers eligible for caching. |

| Maximum Object Size | 50000 | Specifies the largest object in bytes that the system considers eligible for caching. |

| URI Caching | Not Configured | Specifies whether the system retains or excludes certain Uniform Resource Identifiers (URIs) in the cache. The process forces the system either to cache URIs that typically are ineligible for caching, or to not cache URIs that typically are eligible for caching. |

| URI List | No default value | Specifies the URIs that the system either includes in or excludes from caching. Note: You can use regular expressions to specify URIs in accordance with BIG-IP supported meta characters. |

| Ignore Headers | All | Specifies how the system processes client-side Cache-Control headers when caching is enabled. |

| Insert Age Header | Enabled | Specifies, when enabled, that the system inserts Date and Age headers in the cached entry. The Date header contains the current date and time on the BIG-IP system. The Age header contains the length of time that the content has been in the cache. |

| Aging Rate | 9 | Specifies how quickly the system ages a cache entry. The aging rate ranges from 0 (slowest aging) to 10 (fastest aging). |

| AM Applications | No default | Lists enabled Application Acceleration Manager applications in the Enabled field and available applications in the Available field. |
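As a rough illustration of how the size and age settings in this table interact, the following sketch checks an object against the default values. The class and function names are hypothetical, and treating the limits as inclusive is an assumption; the table does not say how the system handles boundary values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheLimits:
    """Default values from the Web Acceleration profile settings table."""
    min_object_size: int = 500    # bytes
    max_object_size: int = 50000  # bytes
    max_age: int = 3600           # seconds


def size_eligible(size_bytes, limits=CacheLimits()):
    """Return True if the object size falls inside the cacheable range.

    Assumption: both limits are inclusive.
    """
    return limits.min_object_size <= size_bytes <= limits.max_object_size


def still_valid(age_seconds, limits=CacheLimits()):
    """Return True while a cached entry is no older than Maximum Age."""
    return age_seconds <= limits.max_age


print(size_eligible(1200))   # inside the default range
print(size_eligible(120))    # smaller than Minimum Object Size
print(still_valid(4000))     # older than Maximum Age
```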

Meta characters

This table describes the meta characters that are supported by the BIG-IP for pattern matching.

| Meta character | Description | Example |

|---|---|---|

| . | Matches any single character. | |

| ^ | Matches the beginning of the line in a regular expression. The BIG-IP assumes that the beginning and end of line meta characters exist for every regular expression it sees. | |

| $ | Matches the end of the line. The BIG-IP assumes that the beginning and end of line meta characters exist for every regular expression it sees. | |

| * | Matches zero or more of the patterns that precede it. | The expression G.*P.* matches any string that begins with G and contains a P anywhere after it, such as GP. A pattern starting with the * character is the same as a pattern starting with .* because the BIG-IP interprets the two forms as identical. |

| + | Matches one or more of the patterns that precede it. | The expression G.+P.* matches any string that begins with G and has at least one character between the G and a following P. Do not begin a pattern with the + character. For example, do not use +Plan. Instead, use .+Plan. |

| ? | Matches none, or one of the patterns that precede it. | The expression G.?P.* matches any string that begins with G followed by P, with at most one character between them. Do not begin a pattern with the ? character. For example, do not use ?Plan. Instead, use .?Plan. |

| [...] | Matches a set of characters. You can list the characters in the set using a string made of the characters to match. | The expression C[AHR] matches CA, CH, and CR. You can also provide a range of characters by using a dash. For example, the expression AA[0-9]+ matches AA followed by one or more digits, such as AA5 or AA123. It does not, however, match AAB2. To match any alphanumeric character, both upper-case and lower-case, use the expression [a-zA-Z0-9]. |

| [^...] | Matches any character not in the set. Just as with the [...] meta character, you can specify the individual characters, or a range of characters by using a dash (-). | The expression C[^AHR].* matches any string that begins with C and whose second character is not A, H, or R. It does not, however, match strings that begin with CA, CH, or CR. |

| (...) | Matches the regular expression contained inside the parentheses, as a group. | The expression AA(12)+CV matches AA12CV, AA1212CV, and so on. |

| exp1 \| exp2 | Matches either exp1 or exp2, where exp1 and exp2 are regular expressions. | The expression AA([de]12\|[zy]13)CV matches AAd12CV, AAe12CV, AAz13CV, and AAy13CV. |
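The meta characters in this table can be tried out with a short script. This is a sketch only: Python's `re` module is not the BIG-IP pattern matcher, and `re.fullmatch` is used here to approximate the implicit beginning-of-line and end-of-line anchors that the table describes. The helper name `anchored_match` and the test strings are illustrative.

```python
import re


def anchored_match(pattern, text):
    """Match the whole string, approximating the implicit ^ and $ anchors."""
    return re.fullmatch(pattern, text) is not None


# (pattern, candidate string, whether a match is expected)
checks = [
    ("G.*P.*", "GP", True),                   # * allows zero characters
    ("G.+P.*", "GP", False),                  # + needs at least one character
    ("G.?P.*", "GaP", True),                  # ? allows zero or one character
    ("C[AHR]", "CH", True),                   # character set
    ("AA[0-9]+", "AAB2", False),              # B is not in the range 0-9
    ("C[^AHR].*", "CAT", False),              # A is in the negated set
    ("AA(12)+CV", "AA1212CV", True),          # repeated group
    ("AA([de]12|[zy]13)CV", "AAz13CV", True), # alternation
]

for pattern, text, expected in checks:
    result = anchored_match(pattern, text)
    assert result == expected
    print(f"{pattern!r} vs {text!r}: {'match' if result else 'no match'}")
```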

Web Acceleration Profile statistics description

This topic provides a description of Web Acceleration Profile statistics produced in tmsh.

Viewing Web Acceleration profile statistics

Statistics for the Web Acceleration Profile can be viewed in tmsh by using the following command.

tmsh show /ltm profile web-acceleration <profile_name>

Each statistic is described in the following tables.

| Statistic | Description |

|---|---|

| Virtual Server | The name of the associated virtual server. |

| Statistic | Description |

|---|---|

| Cache Size (in Bytes) | The cache size for small objects (<4k) in cache and metastor tracking data. |

| Total Cached Items | The total number of objects cached in the local cache for each TMM. |

| Total Evicted Items | The total number of small objects (<4k) and metastor tracking data entities evicted from cache. |

| Inter-Stripe Size (in Bytes) | The inter-stripe cache size for small objects (<4k) in cache and metastor tracking data. |

| Inter-Stripe Cached Items | The total number of objects in the inter-stripe caches for each TMM. |

| Inter-Stripe Evicted Items | The total number of small objects (<4k) and metastor tracking data entities evicted from the inter-stripe cache for each TMM. |

| Statistic | Description |

|---|---|

| Hits | The total number of cache hits. |

| Misses (Cacheable) | The number of cache misses for objects that can otherwise be cached. |

| Misses (Total) | The number of cache misses for all objects. |

| Inter-Stripe Hits | The number of inter-stripe cache hits for each TMM. |

| Inter-Stripe Misses | The number of inter-stripe cache misses for each TMM. |

| Remote Hits | For LTM only, the number of cache hits for owner TMMs. |

| Remote Misses | The number of cache misses for owner TMMs. |
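One common use of the Hits and Misses (Total) counters is to derive a cache hit ratio. The sketch below assumes that every cache lookup is counted as either a hit or a total miss; the function name and the counter values are hypothetical and are not produced by tmsh.

```python
def cache_hit_ratio(hits, total_misses):
    """Return hits as a fraction of all lookups (0.0 when there are none).

    Assumption: lookups = Hits + Misses (Total).
    """
    lookups = hits + total_misses
    return hits / lookups if lookups else 0.0


# Hypothetical counter values read from the statistics output.
ratio = cache_hit_ratio(hits=900, total_misses=100)
print(f"hit ratio: {ratio:.1%}")
```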

About iSession profiles

The iSession profile tells the system how to optimize traffic. Symmetric optimization requires an iSession profile at both ends of the iSession connection. The system-supplied parent iSession profile, isession, is appropriate for all application traffic, and other iSession profiles have been pre-configured for specific applications. The name of each pre-configured iSession profile indicates the application for which it was configured, such as isession-cifs.

When you configure the iSession local endpoint on the Quick Start screen, the system automatically associates the system-supplied iSession profile isession with the iSession listener isession-virtual it creates for inbound traffic.

You must associate an iSession profile with any virtual server you create for a custom optimized application for outbound traffic, and with any iSession listener you create for inbound traffic.

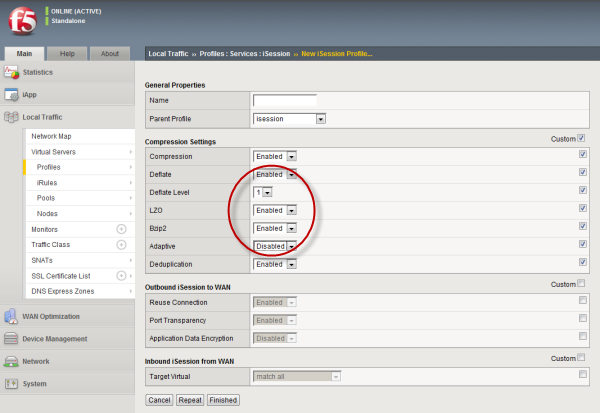

Screen capture showing compression settings

The following screen capture shows the pertinent compression settings.

iSession profile screen with compression settings emphasized

iSession profile screen with compression settings emphasized

About CIFS traffic optimization

Common Internet File System (CIFS) is a remote file access protocol that forms the basis of Microsoft Windows file sharing. Various CIFS implementations (for example, Samba) are also available on other operating systems such as Linux. CIFS is the protocol most often used for transferring files over the network. Using symmetric optimization, the BIG-IP system can optimize CIFS traffic, resulting in faster performance for transferring CIFS files, opening Microsoft applications, and saving files. CIFS optimization is particularly useful when two offices that are located far apart frequently need to share and exchange files.

About MAPI optimization

Messaging Application Program Interface (MAPI) is the email protocol that Microsoft Exchange Server and Outlook clients use to exchange messages. Optimization of MAPI traffic across the WAN requires a virtual server for each Exchange-based server so that the BIG-IP system can use the IP addresses of the Exchange-based servers to locate MAPI traffic.

About TCP profiles

TCP profiles are configuration tools that help you to manage TCP network traffic. Many of the configuration settings of TCP profiles are standard SYSCTL types of settings, while others are unique to the BIG-IP system.

TCP profiles are important because they are required for implementing certain types of other profiles. For example, by implementing TCP, HTTP, Rewrite, HTML, and OneConnect profiles, along with a persistence profile, you can take advantage of various traffic management features, such as:

- Content spooling, to reduce server load

- OneConnect, to pool idle server-side connections

- Layer 7 session persistence, such as hash or cookie persistence

- iRules for managing HTTP traffic

- HTTP data compression

- HTTP pipelining

- URI translation

- HTML content modification

- Rewriting of HTTP redirections

The BIG-IP system includes several pre-configured TCP profiles that you can use as is. In addition to the default tcp profile, the system includes TCP profiles that are pre-configured to optimize LAN and WAN traffic, as well as traffic for mobile users. You can use the pre-configured profiles as is, or you can create a custom profile based on a pre-configured profile and then adjust the values of the settings in the profiles to best suit your particular network environment.

About tcp-lan-optimized profile settings

The tcp-lan-optimized profile is a pre-configured profile type that can be associated with a virtual server. In cases where the BIG-IP virtual server is load balancing LAN-based or interactive traffic, you can enhance the performance of your local-area TCP traffic by using the tcp-lan-optimized profile.

If the traffic profile is strictly LAN-based, or highly interactive, and a standard virtual server with a TCP profile is required, you can configure your virtual server to use the tcp-lan-optimized profile to enhance LAN-based or interactive traffic. For example, applications producing an interactive TCP data flow, such as SSH and TELNET, normally generate a TCP packet for each keystroke. A TCP profile setting such as Slow Start can introduce latency when this type of traffic is being processed. By configuring your virtual server to use the tcp-lan-optimized profile, you can ensure that the BIG-IP system delivers LAN-based or interactive traffic without delay.

A tcp-lan-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for LAN-based traffic.

You can use the tcp-lan-optimized profile as is, or you can create another custom profile, specifying the tcp-lan-optimized profile as the parent profile.

About tcp-mobile-optimized profile settings

The tcp-mobile-optimized profile is a pre-configured profile type, for which the default values are set to give better performance to service providers' 3G and 4G customers. Specific options in the pre-configured profile are set to optimize traffic for most mobile users, and you can tune these settings to fit your network. For files that are smaller than 1 MB, this profile is generally better than the mptcp-mobile-optimized profile. For a more conservative profile, you can start with the tcp-mobile-optimized profile, and adjust from there.

This list provides guidance for relevant settings:

- Set the Proxy Buffer Low to the Proxy Buffer High value minus 64 KB. If the Proxy Buffer High is set to less than 64K, set this value at 32K.

- The size of the Send Buffer ranges from 64K to 350K, depending on network characteristics. If you enable the Rate Pace setting, the send buffer can handle over 128K, because rate pacing eliminates some of the burstiness that would otherwise exist. On a network with higher packet loss, smaller buffer sizes perform better than larger. The number of loss recoveries indicates whether this setting should be tuned higher or lower. Higher loss recoveries reduce the goodput.

- Setting the Keep Alive Interval depends on your fast dormancy goals. The default setting of 1800 seconds allows the phone to enter low power mode while keeping the flow alive on intermediary devices. To prevent the device from entering an idle state, lower this value to under 30 seconds.

- The Congestion Control setting includes delay-based and hybrid algorithms, which might address TCP performance issues better than fully loss-based congestion control algorithms in mobile environments. The Illinois algorithm is more aggressive, and can perform better in some situations, particularly when object sizes are small. When objects are greater than 1 MB, goodput might decrease with Illinois. In a high loss network, Illinois produces lower goodput and higher retransmissions. The Woodside algorithm relies on timestamps to determine transmission. If timestamps are not available in your network, avoid using Woodside.

- For 4G LTE networks, specify the Packet Loss Ignore Rate as 0. For 3G networks, specify 2500. When the Packet Loss Ignore Rate is specified as more than 0, the number of retransmitted bytes and received SACKs might increase dramatically.

- For the Packet Loss Ignore Burst setting, specify within the range of 6-12, if the Packet Loss Ignore Rate is set to a value greater than 0. A higher Packet Loss Ignore Burst value increases the chance of unnecessary retransmissions.

- For the Initial Congestion Window Size setting, round trips can be reduced when you increase the initial congestion window from 0 to 10 or 16.

- Enabling the Rate Pace setting can result in improved goodput. It reduces loss recovery across all congestion algorithms, except Illinois. The aggressive nature of Illinois results in multiple loss recoveries, even with rate pacing enabled.
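The Proxy Buffer Low guidance in the list above is simple arithmetic, sketched here as a helper. The function name is hypothetical, and treating 1K as 1024 bytes is an assumption. The rule is applied exactly as stated, so a Proxy Buffer High of exactly 64K yields 0, and any value below 64K yields the fixed 32K.

```python
KB = 1024  # assumption: 1K is treated as 1024 bytes


def suggested_proxy_buffer_low(proxy_buffer_high):
    """Apply the stated rule: High minus 64 KB, or 32K when High is below 64K."""
    if proxy_buffer_high < 64 * KB:
        return 32 * KB
    return proxy_buffer_high - 64 * KB


# Hypothetical Proxy Buffer High values, in bytes.
for high in (128 * KB, 48 * KB):
    print(high, "->", suggested_proxy_buffer_low(high))
```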

A tcp-mobile-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for mobile traffic.

You can use the tcp-mobile-optimized profile as is, or you can create another custom profile, specifying the tcp-mobile-optimized profile as the parent profile.

About mptcp-mobile-optimized profile settings

The mptcp-mobile-optimized profile is a pre-configured profile type for use in reverse proxy and enterprise environments for mobile applications that are front-ended by a BIG-IP system. This profile provides a more aggressive starting point than the tcp-mobile-optimized profile. It uses newer congestion control algorithms and a newer TCP stack, and is generally better for files that are larger than 1 MB. Specific options in the pre-configured profile are set to optimize traffic for most mobile users in this environment, and you can tune these settings to accommodate your network.

The enabled Multipath TCP (MPTCP) option provides more bandwidth and higher network utilization. It allows multiple client-side flows to connect to a single server-side flow. MPTCP automatically and quickly adjusts to congestion in the network, moving traffic away from congested paths and toward uncongested paths.

The Congestion Control setting includes delay-based and hybrid algorithms, which might address TCP performance issues better than fully loss-based congestion control algorithms in mobile environments. Refer to the online help descriptions for assistance in selecting the setting that corresponds to your network conditions.

The enabled Rate Pace option mitigates bursty behavior in mobile networks and other configurations. It can be useful on high latency or high BDP (bandwidth-delay product) links, where packet drop is likely to be a result of buffer overflow rather than congestion.
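For reference, the bandwidth-delay product mentioned above is the link bandwidth multiplied by the round-trip time, which gives the amount of data that can be in flight at once. The sketch below works one example; the function name and the link figures are hypothetical.

```python
def bandwidth_delay_product_bytes(bandwidth_bits_per_second, rtt_milliseconds):
    """Return the bandwidth-delay product in bytes.

    bits/s * ms / 1000 gives bits in flight; dividing by 8 gives bytes.
    """
    return bandwidth_bits_per_second * rtt_milliseconds / 8000


# Hypothetical link: 20 Mbit/s with a 100 ms round-trip time.
bdp = bandwidth_delay_product_bytes(20_000_000, 100)
print(f"BDP: {bdp:.0f} bytes")
```

A link whose BDP is large relative to the buffers along the path is the kind of link on which pacing the sending rate can help avoid buffer overflow.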

An mptcp-mobile-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for mobile traffic.

You can use the mptcp-mobile-optimized profile as is, or you can create another custom profile, specifying the mptcp-mobile-optimized profile as the parent profile.

About tcp-wan-optimized profile settings

The tcp-wan-optimized profile is a pre-configured profile type. In cases where the BIG-IP system is load balancing traffic over a WAN link, you can enhance the performance of your wide-area TCP traffic by using the tcp-wan-optimized profile.

If the traffic profile is strictly WAN-based, and a standard virtual server with a TCP profile is required, you can configure your virtual server to use a tcp-wan-optimized profile to enhance WAN-based traffic. For example, in many cases, the client connects to the BIG-IP virtual server over a WAN link, which is generally slower than the connection between the BIG-IP system and the pool member servers. By configuring your virtual server to use the tcp-wan-optimized profile, the BIG-IP system can accept the data more quickly, allowing resources on the pool member servers to remain available. Also, use of this profile can increase the amount of data that the BIG-IP system buffers while waiting for a remote client to accept that data. Finally, you can increase network throughput by reducing the number of short TCP segments that the BIG-IP system sends on the network.

A tcp-wan-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for WAN-based traffic.

You can use the tcp-wan-optimized profile as is, or you can create another custom profile, specifying the tcp-wan-optimized profile as the parent profile.

About HTTP2 (experimental) profiles

You can configure the BIG-IP Acceleration HTTP/2 profile to provide gateway functionality for HTTP/2 traffic, minimizing the latency of requests by multiplexing streams and compressing headers.

When a client initiates an HTTP/2 request to the BIG-IP system, the HTTP/2 virtual server receives the request on port 443 and sends the request to the appropriate server. When the server provides a response, the BIG-IP system compresses and caches it, and sends the response to the client.

Summary of HTTP/2 profile functionality

By using the HTTP/2 profile, the BIG-IP system provides the following functionality for HTTP/2 requests.

- Creating concurrent streams for each connection. You can specify the maximum number of concurrent HTTP requests that are accepted on an HTTP/2 connection. If this maximum number is exceeded, the system closes the connection.

- Limiting the duration of idle connections. You can specify the maximum duration for an idle HTTP/2 connection. If this maximum duration is exceeded, the system closes the connection.

- Enabling a virtual server to process HTTP/2 requests. You can configure the HTTP/2 profile on the virtual server to receive HTTP, SPDY, and HTTP/2 traffic, or to receive only HTTP/2 traffic, based on the activation mode you select. (Note that configuring the HTTP/2 profile to receive only HTTP/2 traffic is primarily intended for troubleshooting.)

- Inserting a header into the request. You can insert a header with a specific name into the request. The default name for the header is X-HTTP/2.

About HTTP/2 profiles

The BIG-IP system's Acceleration functionality includes an HTTP/2 profile type that you can use to manage HTTP/2 traffic, improving the efficiency of network resources while reducing the perceived latency of requests and responses. The Acceleration HTTP/2 profile enables you to achieve these advantages by multiplexing streams and compressing headers with Transport Layer Security (TLS) or Secure Sockets Layer (SSL) security.

The HTTP/2 protocol uses a binary framing layer that defines a frame type and purpose in managing requests and responses. The binary framing layer determines how HTTP messages are encapsulated and transferred between the client and server, a significant benefit of HTTP/2 when compared to earlier versions.

All HTTP/2 communication occurs by means of a connection with bidirectional streams. Each stream includes messages, consisting of one or more frames, that can be interleaved and reassembled using the embedded stream identifier within each frame's header. The HTTP/2 profile enables you to specify a maximum frame size and write size, which controls the total size of combined data frames, to improve network utilization.
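To make the role of the embedded stream identifier concrete, the following sketch parses a frame header using the 9-octet layout published in RFC 7540 (24-bit length, 8-bit type, 8-bit flags, one reserved bit, and a 31-bit stream identifier). This is an assumption about the wire format: the experimental profile described here may implement an earlier draft with a different layout. The class and function names are illustrative.

```python
import struct
from typing import NamedTuple


class FrameHeader(NamedTuple):
    length: int      # payload length in octets
    frame_type: int  # for example, 0x0 is DATA in RFC 7540
    flags: int
    stream_id: int   # identifies the stream the frame belongs to


def parse_frame_header(data: bytes) -> FrameHeader:
    """Parse the first 9 octets of a frame (RFC 7540 layout, assumed)."""
    if len(data) < 9:
        raise ValueError("a frame header is 9 octets long")
    # The 24-bit length is read as one byte plus one 16-bit value.
    hi, lo, frame_type, flags, stream = struct.unpack(">BHBBI", data[:9])
    # Mask off the reserved high bit of the stream identifier.
    return FrameHeader((hi << 16) | lo, frame_type, flags, stream & 0x7FFFFFFF)


# A DATA frame header: 5-octet payload, flags 0x1, stream 3.
header = parse_frame_header(bytes([0, 0, 5, 0, 1, 0, 0, 0, 3]))
print(header)
```

Because every frame carries its stream identifier, a receiver can reassemble interleaved frames into their original messages regardless of arrival order across streams.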

Multiplexing streams

You can use the HTTP/2 profile to multiplex streams (interleaving and reassembling the streams) by specifying a maximum number of concurrent streams permitted for a single connection. Also, because multiplexed streams on a single TCP connection compete for shared bandwidth, you can use the profile's Priority Handling setting to configure stream prioritization and define the relative order of delivery. For example, a Strict setting processes higher priority streams to completion before processing lower priority streams, whereas a Fair setting allows higher priority streams to use more bandwidth than lower priority streams, without completely blocking the lower priority streams.
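The difference between the Strict and Fair behaviors can be illustrated with a toy scheduler. This is not the BIG-IP algorithm: the weighted round-robin used for the Fair case, the convention that a larger number means a higher priority, and the stream names are all assumptions made for illustration.

```python
def strict_order(streams):
    """Send every frame of the highest priority stream before any other."""
    order = []
    by_priority = sorted(streams.items(), key=lambda kv: -kv[1][0])
    for name, (_priority, frames) in by_priority:
        order.extend([name] * frames)
    return order


def fair_order(streams):
    """Weighted round-robin: each pass, a stream sends up to `priority` frames."""
    remaining = {name: frames for name, (_p, frames) in streams.items()}
    weights = {name: max(priority, 1) for name, (priority, _f) in streams.items()}
    order = []
    while any(remaining.values()):
        for name in sorted(remaining, key=lambda n: -weights[n]):
            take = min(weights[name], remaining[name])
            order.extend([name] * take)
            remaining[name] -= take
    return order


# Hypothetical streams: name -> (priority, frames left to send).
streams = {"css": (2, 4), "image": (1, 4)}
print("strict:", strict_order(streams))
print("fair:  ", fair_order(streams))
```

In the strict ordering the lower priority stream sends nothing until the higher priority stream finishes, while in the fair ordering it makes progress on every pass, only more slowly.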

Additionally, you can specify the way that the HTTP/2 profile controls the flow of streams. The Receive Window setting allows HTTP/2 to stall individual upload streams, as needed. For example, if the BIG-IP system is unable to process a slow stream on a connection, but is able to process other streams on the connection, it can use the Receive Window setting to specify a frame size for the slow stream, thus delaying that upload stream until the size is met and the receiver is able to process it, while concurrently proceeding to process frames for another stream.

Compressing headers

When you configure the HTTP/2 profile's Header Table Size setting, you can compress HTTP headers to conserve bandwidth. Compressing HTTP headers reduces the object size, which reduces required bandwidth. For example, you can specify a larger table value for better compression, but at the expense of using more memory.

HTTP/2 (experimental) profile settings

This table provides descriptions of the HTTP/2 profile settings.

| Setting | Default | Description |

|---|---|---|

| Name | | Specifies the name of the HTTP/2 profile. |

| Parent Profile | http2 | Specifies the profile that you want to use as the parent profile. Your new profile inherits all settings and values from the parent profile specified. |

| Concurrent Streams Per Connection | 10 | Specifies the number of concurrent requests allowed to be outstanding on a single HTTP/2 connection. |

| Connection Idle Timeout | 300 | Specifies the number of seconds an HTTP/2 connection is left open idly before it is closed. |

| Insert Header | Disabled | Specifies whether an HTTP header that indicates the use of HTTP/2 is inserted into the request sent to the origin web server. |

| Insert Header Name | X-HTTP/2 | Specifies the name of the HTTP header controlled by the Insert Header setting. |

| Activation Modes | Select Modes | Specifies how a connection is established as an HTTP/2 connection. |

| Selected Modes | ALPN NPN | Used only with an Activation Modes selection of Select Modes, specifies the extension, ALPN for HTTP/2 or NPN for SPDY, used in the HTTP/2 profile. The order of the extensions in the Selected Modes Enabled list ranges from most preferred (first) to least preferred (last). Clients typically use the first supported extension. At least one HTTP/2 mode must be included in the Enabled list. The values ALPN and NPN specify that the TLS Application Layer Protocol Negotiation (ALPN) and Next Protocol Negotiation (NPN) will be used to determine whether HTTP/2 or SPDY should be activated. Clients that use TLS but support only HTTP work as if HTTP/2 is not present. The value Always specifies that all connections function as HTTP/2 connections. Selecting Always in the Activation Modes list is primarily intended for troubleshooting. |

| Priority Handling | Strict | Specifies how the HTTP/2 profile handles priorities of concurrent streams within the same connection. Selecting Strict processes higher priority streams to completion before processing lower priority streams. Selecting Fair enables higher priority streams to use more bandwidth than lower priority streams, without completely blocking the lower priority streams. |

| Receive Window | 32 | Specifies the receive window, which is HTTP/2 protocol functionality that controls flow, in KB. The receive window allows the HTTP/2 protocol to stall individual upload streams when needed. |

| Frame Size | 2048 | Specifies the size of the data frames, in bytes, that the HTTP/2 protocol sends to the client. Larger frame sizes improve network utilization, but can affect concurrency. |

| Write Size | 16384 | Specifies the total size of combined data frames, in bytes, that the HTTP/2 protocol sends in a single write function. This setting controls the size of the TLS records when the HTTP/2 protocol is used over Secure Sockets Layer (SSL). A large write size causes the HTTP/2 protocol to buffer more data and improves network utilization. |

| Header Table Size | 4096 | Specifies the size of the header table, in KB. The HTTP/2 protocol compresses HTTP headers to save bandwidth. A larger table size allows better compression, but requires more memory. |

About SPDY profiles

You can use the BIG-IP system SPDY (pronounced "speedy") profile to minimize latency of HTTP requests by multiplexing streams and compressing headers. When you assign a SPDY profile to an HTTP virtual server, the HTTP virtual server informs clients that a SPDY virtual server is available to respond to SPDY requests.

When a client sends an HTTP request, the HTTP virtual server manages the request as a standard HTTP request. It receives the request on port 80, and sends the request to the appropriate server. When the server provides a response, the BIG-IP system inserts an HTTP header into the response (to inform the client that a SPDY virtual server is available to handle SPDY requests), compresses and caches it, and sends the response to the client.

When a client that is enabled to use the SPDY protocol sends a SPDY request to the BIG-IP system, the SPDY virtual server receives the request on port 443, converts the SPDY request into an HTTP request, and sends the request to the appropriate server. When the server provides a response, the BIG-IP system converts the HTTP response into a SPDY response, compresses and caches it, and sends the response to the client.

Summary of SPDY profile functionality

By using the SPDY profile, the BIG-IP system provides the following functionality for SPDY requests.

- Creating concurrent streams for each connection. You can specify the maximum number of concurrent HTTP requests that are accepted on a SPDY connection. If this maximum number is exceeded, the system closes the connection.

- Limiting the duration of idle connections. You can specify the maximum duration for an idle SPDY connection. If this maximum duration is exceeded, the system closes the connection.

- Enabling a virtual server to process SPDY requests. You can configure the SPDY profile on the virtual server to receive both HTTP and SPDY traffic, or to receive only SPDY traffic, based on the activation mode you select. (Note that setting this to receive only SPDY traffic is primarily intended for troubleshooting.)

- Inserting a header into the response. You can insert a header with a specific name into the response. The default name for the header is X-SPDY.

About NTLM profiles

NT LAN Manager (NTLM) is an industry-standard technology that uses an encrypted challenge/response protocol to authenticate a user without sending the user's password over the network. Instead, the system requesting authentication performs a calculation to prove that the system has access to the secured NTLM credentials. NTLM credentials are based on data such as the domain name and user name, obtained during the interactive login process.

The NTLM profile within the BIG-IP system optimizes network performance when the system is processing NT LAN Manager traffic. When both an NTLM profile and a OneConnect profile are associated with a virtual server, the local traffic management system can take advantage of server-side connection pooling for NTLM connections.

How does the NTLM profile work?

When the NTLM profile is associated with a virtual server and the server replies with an HTTP 401 Unauthorized response message, the NTLM profile inserts a cookie, along with additional profile options, into the HTTP response. The information is encrypted with a user-supplied passphrase and associated with the serverside flow. Further client requests are allowed to reuse this flow only if they present the NTLMConnPool cookie containing the matching information. By using a cookie in the NTLM profile, the BIG-IP system does not need to act as an NTLM proxy, and returning clients do not need to be re-authenticated.

The NTLM profile works by parsing the HTTP request containing the NTLM type 3 message and securely storing the following pieces of information (aside from those which are disabled in the profile):

- User name

- Workstation name

- Target server name

- Domain name

- Cookie previously set (cookie name supplied in the profile)

- Source IP address

With the information safely stored, the BIG-IP system can then use the data as a key when determining which clientside requests to associate with a particular serverside flow. You can configure this using the NTLM profile options. For example, if a server's resources can be openly shared by all users in that server's domain, then you can enable the Key By NTLM Domain setting, and all serverside flows from the users of the same domain can be pooled for connection reuse without further authentication. Or, if a server's resources can be openly shared by all users originating from a particular IP address, then you can enable the Key By Client IP Address setting and all serverside flows from the same source IP address can be pooled for connection reuse.
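The keying behavior described above can be illustrated with a minimal Python sketch. It is not the BIG-IP implementation: the NtlmIdentity type, its field names, and the pool_key function are hypothetical names chosen for illustration, and the sketch assumes that two requests may share a serverside flow when every enabled key field matches.

```python
from typing import Iterable, NamedTuple, Optional, Tuple


class NtlmIdentity(NamedTuple):
    """Hypothetical record of the values stored from an NTLM type 3 message."""
    user: str
    workstation: str
    target: str
    domain: str
    cookie: Optional[str]
    client_ip: str


def pool_key(identity: NtlmIdentity, key_by: Iterable[str]) -> Tuple:
    """Build a pooling key from only the fields that are enabled as keys."""
    enabled = set(key_by)
    return tuple(
        (name, getattr(identity, name))
        for name in NtlmIdentity._fields
        if name in enabled
    )


alice = NtlmIdentity("alice", "ws1", "srv1", "CORP", None, "10.0.0.5")
bob = NtlmIdentity("bob", "ws2", "srv1", "CORP", None, "10.0.0.9")

# Keying by domain only: both users map to the same key, so their flows can be pooled.
same_domain = pool_key(alice, ["domain"]) == pool_key(bob, ["domain"])

# Keying by user and domain: the keys differ, so the flows are kept apart.
same_user = pool_key(alice, ["user", "domain"]) == pool_key(bob, ["user", "domain"])
```

In this sketch, enabling fewer key fields widens sharing, which mirrors the trade-off between connection reuse and per-user isolation described in the text.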

About OneConnect profiles

The OneConnect profile type implements the BIG-IP system's OneConnect feature. This feature can increase network throughput by efficiently managing connections created between the BIG-IP system and back-end pool members. You can use the OneConnect feature with any TCP-based protocol, such as HTTP or RTSP.

How does OneConnect work?

The OneConnect feature works with request headers to keep existing server-side connections open and available for reuse by other clients. When a client makes a new connection to a virtual server configured with a OneConnect profile, the BIG-IP system parses the request, selects a server using the load-balancing method defined in the pool, and creates a connection to that server. When the client's initial request is complete, the BIG-IP system temporarily holds the connection open and makes the idle TCP connection to the pool member available for reuse.

When another connection is subsequently initiated to the virtual server, if an existing server-side flow to the pool member is open and idle, the BIG-IP system applies the OneConnect source mask to the IP address in the request to determine whether the request is eligible to reuse the existing idle connection. If the request is eligible, the BIG-IP system marks the connection as non-idle and sends a client request over that connection. If the request is not eligible for reuse, or an idle server-side flow is not found, the BIG-IP system creates a new server-side TCP connection and sends client requests over the new connection.
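The source mask check can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the BIG-IP implementation: the function names are hypothetical, only IPv4 is handled, and the sketch assumes that a request is eligible when its masked source address equals the masked address of the client that opened the idle connection.

```python
import ipaddress


def masked_source(client_ip: str, source_mask: str) -> ipaddress.IPv4Address:
    """Apply a dotted-quad mask to an IPv4 source address with a bitwise AND."""
    ip = int(ipaddress.IPv4Address(client_ip))
    mask = int(ipaddress.IPv4Address(source_mask))
    return ipaddress.IPv4Address(ip & mask)


def can_reuse(new_client_ip: str, opener_ip: str, source_mask: str) -> bool:
    """Assumed rule: the masked addresses of both clients must be equal."""
    return masked_source(new_client_ip, source_mask) == masked_source(
        opener_ip, source_mask
    )


# A mask of 0.0.0.0 discards every bit, so any client may reuse the connection.
any_client = can_reuse("192.0.2.1", "10.1.2.200", "0.0.0.0")

# A mask of 255.255.255.0 limits reuse to clients in the same /24 network.
same_subnet = can_reuse("10.1.2.3", "10.1.2.200", "255.255.255.0")
other_subnet = can_reuse("10.1.3.3", "10.1.2.200", "255.255.255.0")
```

Under this model, a mask of 255.255.255.255 restricts reuse to requests from the exact same source address.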

About client source IP addresses

The standard address translation mechanism on the BIG-IP system translates only the destination IP address in a request and not the source IP address (that is, the client node’s IP address). However, when the OneConnect feature is enabled, allowing multiple client nodes to reuse a server-side connection, the source IP address in the header of each client node’s request is always the IP address of the client node that initially opened the server-side connection. Although this does not affect traffic flow, you might see evidence of this when viewing certain types of system output.

The OneConnect profile settings

When configuring a OneConnect profile, you specify this information:

- Source mask

- The mask applied to the source IP address to determine the connection's eligibility to reuse a server-side connection.

- Maximum size of idle connections

- The maximum number of idle server-side connections kept in the connection pool.

- Maximum age before deletion from the pool

- The maximum number of seconds that a server-side connection is allowed to remain before the connection is deleted from the connection pool.

- Maximum reuse of a connection

- The maximum number of requests to be sent over a server-side connection. This number should be slightly lower than the maximum number of HTTP Keep-Alive requests accepted by servers in order to prevent the server from initiating a connection close action and entering the TIME_WAIT state.

- Idle timeout override

- The maximum time that idle server-side connections are kept open. Lowering this value may result in a lower number of idle server-side connections, but may increase request latency and server-side connection rate.
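How the size, age, and reuse limits interact can be shown with a small Python model of an idle connection pool. This is a sketch, not the BIG-IP implementation: the class and attribute names are hypothetical, age is assumed to be measured from the time the connection was opened, time is passed in explicitly so that the example is deterministic, and the idle timeout override is not modeled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ServerConn:
    opened_at: float          # time the server-side connection was created
    requests_sent: int = 0    # requests carried so far


@dataclass
class IdlePool:
    max_size: int     # maximum number of idle connections kept
    max_age: float    # seconds a connection may live before removal
    max_reuse: int    # maximum requests sent over one connection
    idle: List[ServerConn] = field(default_factory=list)

    def acquire(self, now: float) -> ServerConn:
        """Reuse an idle connection that is young enough, else open a new one."""
        while self.idle:
            conn = self.idle.pop()
            if now - conn.opened_at < self.max_age:
                return conn
        return ServerConn(opened_at=now)

    def release(self, conn: ServerConn, now: float) -> bool:
        """Count the finished request; return True if the connection was pooled."""
        conn.requests_sent += 1
        if conn.requests_sent >= self.max_reuse:
            return False  # reuse limit reached, connection is closed
        if now - conn.opened_at >= self.max_age:
            return False  # too old to keep
        if len(self.idle) >= self.max_size:
            return False  # pool is full
        self.idle.append(conn)
        return True


pool = IdlePool(max_size=2, max_age=60.0, max_reuse=3)
first = pool.acquire(now=0.0)
kept_after_1 = pool.release(first, now=1.0)    # 1 request sent, pooled
second = pool.acquire(now=2.0)                 # same connection is reused
kept_after_2 = pool.release(second, now=3.0)   # 2 requests sent, pooled
third = pool.acquire(now=4.0)
kept_after_3 = pool.release(third, now=5.0)    # 3 requests sent, limit reached
```

In this model, setting the reuse limit below the server's own Keep-Alive request limit means the pool retires the connection before the server would close it.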

OneConnect and HTTP profiles

Content switching for HTTP requests

When you assign both a OneConnect profile and an HTTP profile to a virtual server, and an HTTP client sends multiple requests within a single connection, the BIG-IP system can process each HTTP request individually. The BIG-IP system sends the HTTP requests to different destination servers as determined by the load balancing method. Without a OneConnect profile enabled for the HTTP virtual server, the BIG-IP system performs load-balancing only once for each TCP connection.
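The difference between load balancing once per connection and once per request can be sketched as follows. The example assumes a simple round-robin method and uses hypothetical function, request, and server names; it illustrates the behavior described above rather than the BIG-IP implementation.

```python
from itertools import cycle
from typing import List


def assign(requests: List[str], pool_members: List[str], per_request: bool) -> List[str]:
    """Return the pool member chosen for each request on one client connection."""
    round_robin = cycle(pool_members)
    if per_request:
        # With OneConnect and HTTP profiles: a decision is made for every request.
        return [next(round_robin) for _ in requests]
    # Without OneConnect: one decision for the whole TCP connection.
    member = next(round_robin)
    return [member for _ in requests]


requests = ["GET /a", "GET /b", "GET /c"]
members = ["server1", "server2"]

with_oneconnect = assign(requests, members, per_request=True)
without_oneconnect = assign(requests, members, per_request=False)
```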

HTTP version considerations

For HTTP traffic to be eligible to use the OneConnect feature, the web server must support HTTP Keep-Alive connections. The version of the HTTP protocol you are using determines to what extent this support is available. The BIG-IP system therefore includes a OneConnect transformations feature within the HTTP profile, specifically designed for use with HTTP/1.0, which by default does not enable Keep-Alive connections. With the OneConnect transformations feature, the BIG-IP system can transform HTTP/1.0 requests into HTTP/1.1 requests on the server side, thus allowing those connections to remain open for reuse.

The two different versions of the HTTP protocol treat Keep-Alive connections in these ways:

- HTTP/1.1 requests

- HTTP Keep-Alive connections are enabled by default in HTTP/1.1. With HTTP/1.1 requests, the server does not close the connection when the content transfer is complete, unless the client sends a Connection: close header in the request. Instead, the connection remains active in anticipation of the client reusing the same connection to send additional requests. For HTTP/1.1 requests, you do not need to use the OneConnect transformations feature.

- HTTP/1.0 requests

- HTTP Keep-Alive connections are not enabled by default in HTTP/1.0. With HTTP/1.0 requests, the client typically sends a Connection: close header to close the TCP connection after sending the request. Both the server-side and client-side connections that contain the Connection: close header are closed once the response is sent. When you assign a OneConnect profile to a virtual server, the BIG-IP system transforms Connection: close headers in HTTP/1.0 client-side requests to X-Cnection: close headers on the server side, thereby allowing a client to reuse an existing connection to send additional requests.
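The header rewrite described for HTTP/1.0 requests can be illustrated with a short Python sketch. The function name and the representation of headers as name/value pairs are assumptions made for the example; the sketch only shows the renaming of Connection: close to X-Cnection: close and is not the BIG-IP implementation.

```python
from typing import List, Tuple

Header = Tuple[str, str]


def to_server_side(http_version: str, headers: List[Header]) -> List[Header]:
    """Rename 'Connection: close' to 'X-Cnection: close' for HTTP/1.0 requests."""
    if http_version != "HTTP/1.0":
        # HTTP/1.1 keeps connections alive by default, so nothing is rewritten.
        return list(headers)
    rewritten = []
    for name, value in headers:
        if name.lower() == "connection" and value.strip().lower() == "close":
            # The server no longer sees a close request and keeps the flow open.
            rewritten.append(("X-Cnection", value))
        else:
            rewritten.append((name, value))
    return rewritten


client_headers = [("Host", "www.example.com"), ("Connection", "close")]
server_headers = to_server_side("HTTP/1.0", client_headers)
```

Because the server ignores the unknown X-Cnection header, it does not close the server-side connection after responding, which leaves the connection available for reuse.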

OneConnect and SNATs

When a client makes a new connection to a virtual server that is configured with a OneConnect profile and a source network address translation (SNAT) object, the BIG-IP system parses the HTTP request, selects a server using the load-balancing method defined in the pool, translates the source IP address in the request to the SNAT IP address, and creates a connection to the server. When the client's initial HTTP request is complete, the BIG-IP system temporarily holds the connection open and makes the idle TCP connection to the pool member available for reuse. When a new connection is initiated to the virtual server, the BIG-IP system performs SNAT address translation on the source IP address and then applies the OneConnect source mask to the translated SNAT IP address to determine whether it is eligible to reuse an existing idle connection.
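The order of operations matters here: the mask is applied to the translated address, not to the original client address. The following Python sketch illustrates one consequence, using hypothetical function names and IPv4 addresses only, and assuming that requests with equal masked addresses are eligible to share an idle connection.

```python
import ipaddress
from typing import Optional


def reuse_key(client_ip: str, source_mask: str,
              snat_ip: Optional[str] = None) -> ipaddress.IPv4Address:
    """Translate the source address first (if SNAT is used), then apply the mask."""
    effective_ip = snat_ip if snat_ip is not None else client_ip
    ip = int(ipaddress.IPv4Address(effective_ip))
    mask = int(ipaddress.IPv4Address(source_mask))
    return ipaddress.IPv4Address(ip & mask)


mask = "255.255.255.255"

# Without SNAT, a host mask keeps clients with different addresses apart.
distinct_without_snat = reuse_key("10.1.2.3", mask) != reuse_key("172.16.9.9", mask)

# With SNAT, both clients are translated to the same address before masking,
# so even a host mask yields the same key for both.
shared_with_snat = (
    reuse_key("10.1.2.3", mask, snat_ip="192.0.2.10")
    == reuse_key("172.16.9.9", mask, snat_ip="192.0.2.10")
)
```

In this model, a single SNAT address makes all clients look alike to the source mask, so the mask no longer separates clients by their original addresses.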

OneConnect and NTLM profiles

NT LAN Manager (NTLM) HTTP 401 responses prevent the BIG-IP system from detaching the server-side connection. As a result, a late FIN from a previous client connection might be forwarded to a new client that reused the connection, causing the client-side connection to close before the NTLM handshake completes. If you need NTLM authentication support when using the OneConnect feature, you should configure an NTLM profile in addition to the OneConnect profile.